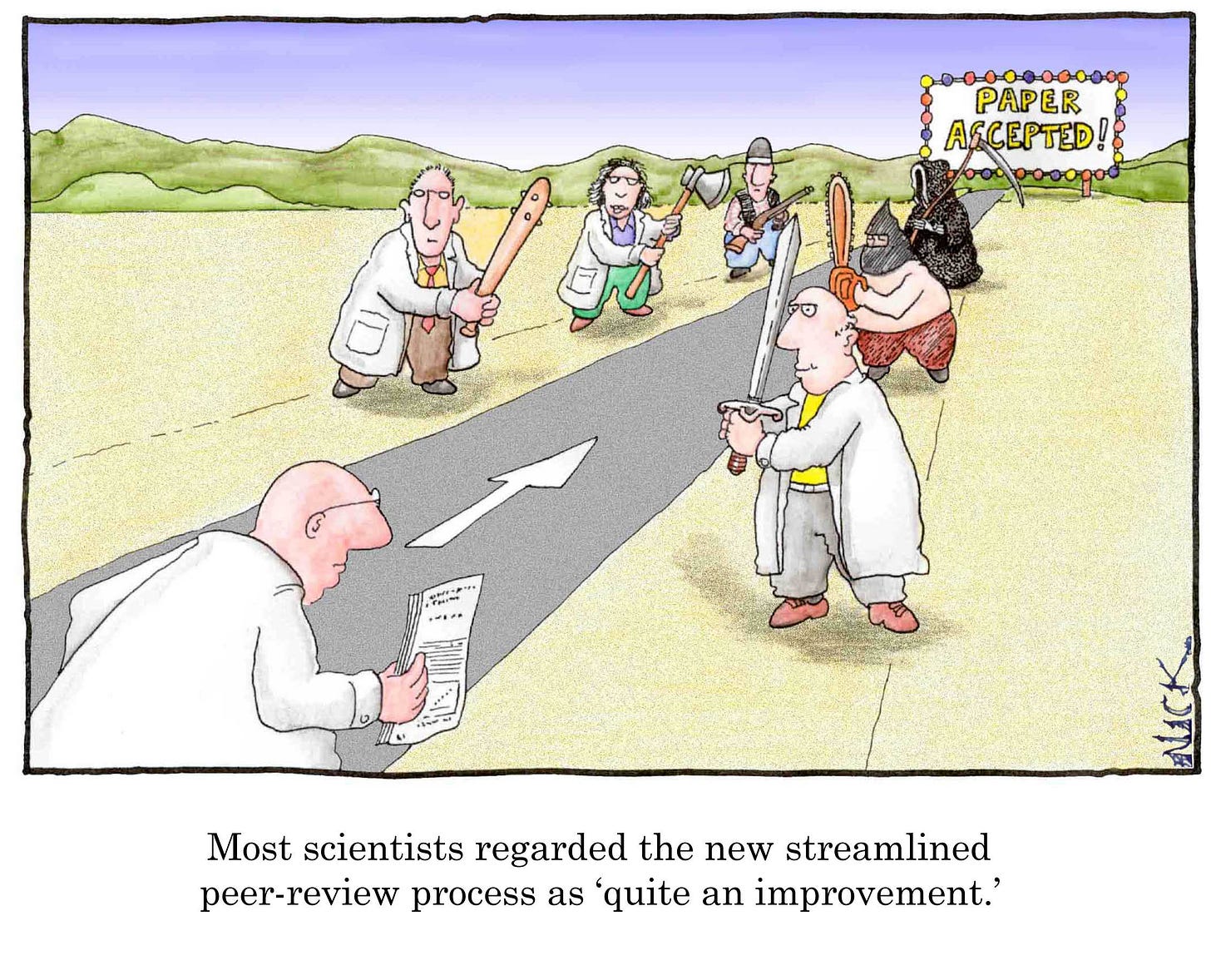

Pretty sure I know the one with the chainsaw and the one in the Grim Reaper getup. Because I nominated them.

[Image source].

Got past a couple of milestones on the route to future book sections recently. One was submission of a revised version of a paper I/we have been working on for nearly a year. The other was an initial submission of another study we’ve been working on for six months or so.

There’s an order-of-operations to producing book sections on this site that I pretty much have to follow: first the original research appears in the peer-reviewed literature, then I can selectively draw from it, recombine it, reduce it and translate into stuff normal people can actually use. There are a couple of reasons for this order of events. One is quality-assurance - the underlying science behind what I’m writing here is vetted for quality and rigor by professional peers. That gives standing to guidelines and recommendations made in these book sections.

The other reason is that journals are extremely fussy about getting to claim first dibs on publishing new knowledge and analysis. They get real mad - like, lawyering-up mad - if they find out something they want to be the first to publish has been published somewhere else previously. I need to stay in at least the minimal good graces of the big academic publishing cartels (at least for now). So I have to be a little cagey about what I share here and when I share it.

Some readers might not be familiar with the academic publishing process so I thought I’d give a brief rundown of how it works and why the pace of things often seems so glacially slow. This is not all complaint on my part - some steps of the process, though tedious and painstaking, can and actually often do result in a substantially better product. Take the case-study of the paper for which I just submitted a big revision. I’ll recap the paper’s history for you:

This paper is called Leveraging DOM UV absorbance and fluorescence to accurately predict and monitor short-chain PFAS removal by fixed-bed carbon adsorbers. Let me work backwards to unpack the title:

“Carbon adsorbers” - a generic way to refer to biochar or granular activated carbon (GAC). The study was done with biochar, but the results are applicable in a GAC context as well.

“Fixed-bed” - engineering-speak for granular media, in a tank, that stays stationary while you flow liquid (or gas) over it. The fluid flows, the media doesn’t.

“Short-chain PFAS” - a subset of per-/poly-fluoroalkyl substances (PFAS) whose carbon backbone is shorter than the better-known “legacy” PFAS pollutants like perfluorooctanesulfonic acid (PFOS) and perfluorooctanoic acid (PFOA). Short-chain PFAS are smaller, more water soluble, less well characterized in terms of occurrence, environmental fate, and toxicity, and more difficult to remove by adsorption than larger PFAS molecules. Our sentinel PFAS, PFBS and GenX, are classified as short-chain and were included in the study.

“Monitor…” Challenge #1 addressed in the paper - monitoring PFAS removal in carbon adsorber treatment systems. Measuring PFAS in water (at their typical ng/L levels) is complicated and expensive. Only a few labs around the world have the analytical capabilities and expertise to do so and it costs an arm and a leg. Spectroscopic water quality assessment methods such as measuring ultraviolet (UV) absorbance and fluorescence are comparatively very inexpensive. These methods don’t measure PFAS, but are sensitive to different components of background dissolved organic matter (DOM) - which causes fouling of the adsorbent and therefore relates to how well the adsorbent can remove target PFAS compounds. In the paper we show how monitoring UV absorbance and fluorescence can be used as proxy measures to assess carbon adsorber performance for PFAS removal.

“Predict…” Challenge #2 addressed in the paper - rapid laboratory bench top experiments are unreliable for accurately simulating removal of PFAS (and other trace pollutants) by full-sized real-world treatment systems. The reason is that small lab bench experiments don’t adequately capture the effects of DOM fouling as they occur in real-world systems. In the paper we show how combining measures of UV absorbance and fluorescence, which relate to DOM fouling, with measures of PFAS provides a more complete picture of overall adsorption behavior in complex solution mixtures - and this allows accurate prediction of real-world system performance from rapid lab bench studies.

So we overcame decades-long challenges to obtain accurate simulations of real-world treatment systems from rapid benchtop experiments, and provided a cheap and field-ready way to monitor treatment performance for removal of really-difficult-to-treat water pollutants of serious emerging concern. Friggin awesome y’all.

The backstory of this paper is as follows: All the data were collected by my friend/colleague/former grad student Myat Thandar Aung during her thesis research in the University lab, and she is first author on the paper. She’s now back home in Burma and has been living through hell for the past year under a violent military coup. Given her situation I took the lead organizing the effort to assemble the paper, but the work is hers and she deserves the lion’s share of the credit.

I started pulling things together last March. As I dug in, I quickly realized I needed to recruit some help from my friend/colleague/former lab-mate Dr. Kyle Shimabuku now of Gonzaga University to analyze and interpret the fluorometry data. Kyle has a lot of experience using UV absorbance and fluorescence to characterize DOM and understand how it contributes to adsorbent fouling and affects removal of target organic micropollutants.

I roped Kyle into the project and we worked on the data and writing for about two months. By mid-summer we had a manuscript together and submitted it to one of the top journals in our field.

Well, whoever the handling editor was who received our submission didn’t like it. I don’t think they really read it at all, certainly did not read anything carefully or closely. We received a blithe, dismissive “desk reject.” A “desk reject” is when an editor takes one look at your paper and says “Nope,” and doesn’t bother sending it out for review.

So on the basis that our paper did not get fair consideration I wrote an appeal to the journal’s editor-in-chief. The EIC agreed to have a look, and replied that the work did seem to have substantial merit, but he felt that (1) the document needed some restructuring to improve readability, and (2) we hadn’t done enough to underscore the importance and novelty of the work.

I find this second point interesting, and honestly, a bit of helpful criticism. Essentially, the EIC was saying “You need to punch this writing up a bunch.” I’ve gotten versions of this same feedback a few times in papers I’ve worked on over the past two or three years. I don’t know if this is a trend or what. My approach to scientific writing is to play it pretty conservative, always being careful not to overstate things, equivocating and stressing uncertainties and unknowns where necessary, etc. It doesn’t make for very punchy writing. I’ve had to do some revisions based on this kind of editorial advice a few times, and overall, I think it’s sound. Under the publish-or-perish regime, the incentives push for way too many papers to be produced. The quality of the science and the quality of the writing go off a cliff. If you want to get your stuff noticed and get it out there under the eyes of relevant experts, it’s gotta be punchy. Faithful to the scientific truth and not-one-iota exaggerated, but punchy. Advice taken.

So we restructured the paper to make the conceptual flow better, and punched up the writing and figures until our knuckles bled.

I have to mention too that, conceptually, this is one of if not the most difficult papers I’ve ever worked on. There's a lot of stuff in there that’s just plain hard to wrap your brain around.

By late August we were ready to resubmit the paper. The EIC sent it out for peer-review. OK so we got past the first gatekeeper…

Now’s a good point to highlight some of the peculiarities of the peer-review process. When you submit a manuscript online you are either requested or, increasingly nowadays, required to provide names, titles, and contact info of three to five people who are qualified to serve as reviewers. This is a way of offloading some of the editor’s work - knowing who the experts are in a given sub-domain of research and how to get in touch with them - onto the study’s authors. But also, pragmatically, who better to know the names and contact info of eligible subdomain experts than the paper’s own subdomain expert authors?

Over the past half-century there’s been such a clamor for science research publishing that sub-domains have evolved into sub-sub-domains and sub-sub-subdomains, each with its own little fiefdom of in-the-weeds zone patrollers. For any given manuscript there may not be that many people in the world with sufficiently detailed esoteric knowledge to be able to provide a real in-depth critical robust review. And if you happen to be one such individual yourself, you probably know most or all of the others. They’re probably your colleagues and collaborators, maybe even your friends. Or alternately, your competitors.

When prompted to identify reviewers for your paper you definitely don’t want to suggest one of your competitors. They’ll most likely try to deep-six your study out of sheer self-interest if not outright steal your ideas to capitalize on themselves. That leaves the potential review pool overpopulated with people you might be a little too close with - folks who might hold a slight positive prejudice about you and your work that could bias their review.

By now you’re probably thinking “This whole peer-review thing is bogus! What’s the point of putting up with the rigamarole for something this flawed?” Reams have been written criticizing peer review - much of which I’m in agreement with and that I won’t belabor further here. Sum it up to say I’m not ready to throw the baby out with the bathwater, yet. Yes, the system is flawed and fairly riggable. The trick for the honest scholar is to rig it in favor of rigor.

When I submitted our paper last August I tagged a couple of erstwhile colleagues as candidate reviewers. These two individuals are people whom, on paper, I and coauthors are arguably a little too close with to have them review our work. A dishonest scholar would do this in order to stack the deck with friendly reviewers who are likely to skimp on rigor and wave the paper through with a glowing recommendation. I picked these particular people because I knew they would be insanely thorough, detailed, and critical. They wouldn’t see my name in the author list and “go easy” - most likely they would be even more brutal than usual!

The review process is blinded so I can’t say for sure if these two folks were among the four reviewers whose comments our paper got back. But I am 99% sure they were. And boy was I right - picking them rigged the process for (extra-plus!) rigor.

We got the reviews back near the beginning of December. It took us a solid month of work to respond to all the comments and suggestions, line-by-line, and produce a majorly revised paper. This was an arduous, painstaking process but it 100% made the paper a lot better. Totally worth it.

Where do things stand currently? The online authors’ portal tells me the revised manuscript is “under review.” I assume it has been sent back to the same four previous reviewers to see if they are satisfied with the changes made and how we responded to the questions and concerns they raised. What could happen as a result? They could be satisfied and recommend to the editor to accept the paper. Or, further changes could be requested and another round of review instigated. The paper could simply be rejected (despite all this work!). We just have to wait and see and respond to the outcome. So it goes.

When and if it’s accepted I’ll begin the process of digesting its details into relevant book sections on this site. Let’s all keep fingers crossed and hope for the best.

Just this week I made the initial submission of the second paper alluded to at the top of this article - a study entitled Predicting organic micropollutant breakthrough in carbon adsorbers based on water quality, adsorbate properties, and rapid small-scale column tests. The cycle begins anew. This paper is pretty cool. We use the largest database ever assembled of lab bench studies paired with full-scale real-world data to develop a series of predictive equations to cover removal of a huge range of pollutant chemicals from a wide variety of different source waters by a whole bunch of different carbon adsorbents. How ‘bout them apples. We’ll have to see how the academic publishing gatekeepers feel about this one.

While I’m waiting to see what happens with that submission I’ve got the next paper on deck in my head. You are absolutely going to love this next one. This one forms the final missing link between all the peer-reviewed research locked up in paywalled journal articles and the practical hands-on tools you can use to design and operate biochar water treatment systems tailored to your specific applications. The dots will finally all be connected. The one ring to bring them all and in the darkness bind them. Can’t wait to get it out of my head onto paper and out into the world where it can do some good…

Thanks for your continued interest and support of this project. Keep the questions and feedback coming and I will do my best to respond…

Josh, Don't let the monster egos get you down. If they were true scholars, they would celebrate and constructively direct your efforts. Remember this when you get to the top, and you are called upon to judge the next generations' work. Above all, keep up the effort, some day society will thank you. This is a critical issue, you are just ahead of the curve. Dr. A. O.

Not being a scientist per se, but an avid reader of white papers and such, my two cents are in making your paper readable. Cut the contractions and use a more "stuffed shirt" approach.

I have been a grammar Nazi (ooh such a bad word!) since I was in high school (74-78), having post-WWII survivor parents/grandparents. The three "R's" and their necessity in life were so ingrained in our minds that to this day I cringe when reading supposedly peer-reviewed articles for all their grammatical errors. Keep up the good work and seek a learned English Writing authority for review of your next submission.